Algorithmic music composition has always had a place in computing. One of the first applications Ada Lovelace proposed for the Analytical Engine, almost 200 years ago, was the generation of musical compositions. The years since have seen a lot of progress in this area, but they have also reinforced something musicians have always known: writing music is hard.

While there has been a lot written about the challenges of emulating or producing creativity with machines, the focus of this post is on a more specific issue: music as data.

How do we represent music as data?

How does the form of this data influence algorithm design and adoption?

What have we learned about using music in machine learning systems?

Neural networks are behind much of the current state-of-the-art work in machine learning with music (and are a major part of my own research), so I’ll also be talking about some practical aspects of using music data with neural networks.

To get things started, let’s look at some of the definitive properties of music.

Music is not a language

This is no longer a particularly controversial observation. Music has a communicative role, and there are common musical patterns used both within and between cultural groups, but music is not semantic. Musical notes don’t ‘mean’ a specific thing.

I find it useful to include this in a (slightly more controversial) working definition of music: “Attended, non-semantic properties of organised sound.”

This definition covers a few important ideas:

- Both music and non-music content can exist within a single sound signal: A piece can have music and lyrics.

- Attending to properties of a sound purely to ascertain semantic meaning makes it a sonification, not music: If you are listening to a sound in your office only to tell if it is your phone ringing, then it isn’t music.

- Any organised sound can be attended to for its non-semantic properties: If you are paying attention to the sound of your ringtone, not just to know if it is your friend calling, but for its sonic shape, textural quality, rhythm and so on, then it is musical.

The implications of a music-language separation are interesting from a cultural and evolutionary biology perspective, but also very important for data scientists.

Firstly, it means that natural language models probably aren’t going to be very effective ‘out-of-the-box’ tools for music analysis and generation. Both the function and the structure of musical data are very different from those of language.

Semantics and syntax

A breakthrough in neural language processing came from word2vec, a neural network model that learns word embeddings based on distributional semantics. These embeddings represent words as multi-dimensional vectors. Training the network positions words that appear in similar contexts in the training corpus close together in the vector space. This means that words with similar semantic meaning tend to cluster together.
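To make ‘similar contexts’ concrete: word2vec’s skip-gram variant learns by predicting the words around each word in the corpus. The sketch below shows only that data-preparation step, pairing each centre word with its neighbours inside a fixed window. The function name and window size are my own illustrative choices, and no embeddings are actually trained here.

```python
def skipgram_pairs(tokens, window=2):
    """Pair each centre token with every token within `window` positions of it."""
    pairs = []
    for i, centre in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own context
                pairs.append((centre, tokens[j]))
    return pairs


sentence = "the cat sat on the mat".split()
pairs = skipgram_pairs(sentence, window=1)
print(pairs[:4])   # [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat')]
print(len(pairs))  # 10
```

Words that keep turning up with the same neighbours end up with similar training targets, which is what pulls their vectors together.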

No semantics means there is no need for syntax, and syntax is the friend of the data scientist.

Semantics and syntax come with a significant gift for those working with natural languages: words. Transcribing speech to text is lossy. Subtle inflections, dialect and accent are lost, but there is a way to symbolise speech into discrete tokens that captures a significant portion of its meaning. Even in poetry, where standard ‘rules’ of syntax are bent or intentionally broken, there is still meaning behind each word, established, in part, by the social contract of language, a contract that gives individuals some shared basis for interpretation.

There are no words in music. Notes are a vague concept at best: when does a note begin or end? Is a slide on a trombone one note, two notes or thousands of step notes? How about drone music or glitch? No sequence of notes makes more or less ‘sense’ than any other.
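The trombone question can be made concrete. A minimal sketch, assuming pitch is converted with the standard MIDI mapping (A4 = 440 Hz = note 69, 12 semitones per octave) and that the slide is sampled at an arbitrary 1,000 points: rounding to semitones says the slide is eight notes, while keeping every sampled pitch says it is a thousand. Neither answer is more ‘correct’; the tokenisation is a choice we impose. The helper names are hypothetical.

```python
import math


def freq_to_midi(freq_hz):
    """Continuous MIDI pitch: 69 is A4 (440 Hz), 12 steps per octave."""
    return 69 + 12 * math.log2(freq_hz / 440.0)


def glide(start_hz, end_hz, steps):
    """Geometrically spaced frequencies, i.e. an even slide in pitch."""
    ratio = (end_hz / start_hz) ** (1 / (steps - 1))
    return [start_hz * ratio ** k for k in range(steps)]


# A hypothetical trombone slide from Bb2 (about 116.54 Hz) up to F3 (about 174.61 Hz).
pitches = [freq_to_midi(f) for f in glide(116.54, 174.61, steps=1000)]

# Answer 1: snap every sample to the nearest semitone.
rounded_notes = sorted({round(p) for p in pitches})
# Answer 2: treat every sampled pitch as its own 'note'.
distinct_pitches = len(set(pitches))

print(rounded_notes)     # [46, 47, 48, 49, 50, 51, 52, 53]
print(distinct_pitches)  # 1000
```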

This means that, overall, machine learning systems have a big advantage when applied to natural language rather than to music. The structure of the data they analyse has developed to establish shared meaning across large populations. Thousands of years of optimisation have shaped spoken languages to balance expressiveness with generalisability and ease of learning. Languages work because they are shareable and learnable, and part of their learnability comes from rules (syntax).

While it could be argued that music is designed to be enjoyed, and taste is (at least to some degree) learned, there is no requirement for multiple people to extract the same meaning from a piece of music. Not ‘understanding’ a piece of music probably hasn’t resulted in as many deaths as verbal misunderstandings or misread labels - so music happily goes on without the burden of syntax. Music may have a specific evolutionary function, but if it does, it appears to be operating at a much slower rate.

A lack of semantic meaning does not mean there is no room for abstraction. There are structural properties of music that we can extract, isolate and recombine with apparent ease. The 3am karaoke rendition of ‘Shake It Off’ still sounds like Taylor Swift’s hit song, even when your drunk friend at the microphone doesn’t know the words and the backing track was recorded on a 1980s Casio keyboard. There is also that strange feeling you get when the bass player in your local high school rock band decides to sit on a Gb underneath the F power chord the guitarist is approximating. We just can’t establish explainable grammar-type rules for how these abstractions fit together to make ‘good’ music.
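One common way to capture the kind of abstraction in the karaoke example is to encode a melody by its intervals rather than its absolute pitches, so that a transposed performance maps to the same sequence. The sketch below uses a made-up fragment, not the melody of any real song, and `to_intervals` is a hypothetical helper. It only captures transposition, not the rhythmic or timbral changes a real karaoke performance introduces.

```python
def to_intervals(midi_notes):
    """Encode a melody as successive semitone steps, discarding absolute pitch."""
    return [b - a for a, b in zip(midi_notes, midi_notes[1:])]


# A made-up melodic fragment as MIDI note numbers (not from any real song).
original = [67, 69, 71, 67, 64, 67]
# The same fragment sung five semitones lower.
transposed = [n - 5 for n in original]

print(transposed)                                          # [62, 64, 66, 62, 59, 62]
print(to_intervals(original))                              # [2, 2, -4, -3, 3]
print(to_intervals(original) == to_intervals(transposed))  # True
```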

There are musical patterns we like and patterns we don’t - and patterns we find generally musical and some we don’t. These distinctions are subjective but there is some commonality among different people.

Repetition

Most people like to hear repetition in music. A lack of repetition makes music hard to follow. Repetition is good within a piece of music. People also like to listen to the same track multiple times. We are much more welcoming of repetition in music than we are in any other communication or creative expression including writing.

Variation

Most people like to hear variation in music. Too much repetition can make music boring.
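One crude way to put a number on this balance in symbolic data is to count how many short note patterns (n-grams) recur within a sequence. The sketch below is an illustrative assumption, not an established measure of musical quality: the choice of n = 4 is arbitrary, the helper name is hypothetical, and a high or low score says nothing about whether listeners will enjoy the result.

```python
from collections import Counter


def repeated_ngram_ratio(tokens, n=4):
    """Share of n-gram occurrences whose n-gram occurs more than once in `tokens`."""
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not grams:
        return 0.0
    counts = Counter(grams)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(grams)


riff = [60, 62, 64, 60] * 4          # one figure looped four times
chromatic_run = list(range(60, 76))  # sixteen notes, no pattern recurs
riff_then_new = [60, 62, 64, 60] * 2 + [65, 67, 69, 71, 72, 74, 76, 77]

print(repeated_ngram_ratio(riff))                     # 1.0
print(repeated_ngram_ratio(chromatic_run))            # 0.0
print(round(repeated_ngram_ratio(riff_then_new), 3))  # 0.154
```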

Symbolic music data

If you are using a large, publicly available MIDI (or MusicXML) collection for music analysis, the data in it is probably very wrong. I don’t just mean the inherent data loss from representing continuous audio signals as discrete events. Most transcriptions are done by fans or extracted from books designed for beginner musicians, and are either intentionally simplified or just incorrect.

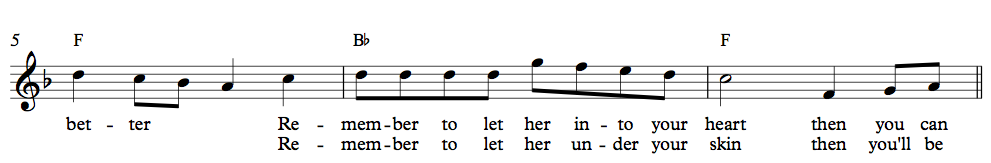

The only way to recognise which pop song this score (from one popular training set) comes from is by its lyrics. The melodic line has little similarity to the original recording.

The well-known issues with MIDI/XML datasets have contributed to the heavy use of classical music in machine learning systems. Classical music has survived as musical scores written on paper, so discretised versions are our primary source. There is also a general music snobbery within the wider academic community that sees western art music of the 17th to 19th centuries as a ‘higher’ or more ‘cerebral’ form of music, which has added to the overrepresentation of classical music (more on this to come). Even with classical music, though, scores are typically first converted to sequential data to aid the training of machine learning systems.

Sequential representations are problematic because music is typically polyphonic. Multiple voices contribute to an arrangement by entering and exiting sections at different times. Is a sequence of chords several melodic lines moving together, or a single melodic line with harmonies added to support it? Ask a pianist and they will probably tell you that the way they think about harmony depends on context.
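The ambiguity shows up as soon as a polyphonic passage has to become a single token sequence. In the sketch below, a hypothetical I-IV-V progression in C major is written in four voices and then flattened in two equally plausible ways: chord by chord, or voice by voice. The data layout and function names are my own illustrative assumptions.

```python
# Four voices as MIDI note numbers over three beats: C major, F major, G major.
chorale = {
    "soprano": [72, 72, 71],
    "alto":    [67, 69, 67],
    "tenor":   [64, 65, 62],
    "bass":    [48, 53, 55],
}


def chord_major_order(voices):
    """Serialise beat by beat: every voice at beat 0, then every voice at beat 1, ..."""
    parts = list(voices.values())
    return [note for beat in zip(*parts) for note in beat]


def voice_major_order(voices):
    """Serialise line by line: the whole soprano part, then the alto part, ..."""
    return [note for part in voices.values() for note in part]


print(chord_major_order(chorale))  # [72, 67, 64, 48, 72, 69, 65, 53, 71, 67, 62, 55]
print(voice_major_order(chorale))  # [72, 72, 71, 67, 69, 67, 64, 65, 62, 48, 53, 55]
```

Both lists contain exactly the same twelve notes. A sequence model trained on the first sees each chord as adjacent tokens; one trained on the second sees each melodic line, but notes that sound together can end up nine positions apart.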

A recent and very successful step towards addressing this was taken by Gaëtan Hadjeres and team. DeepBach combines multiple neural networks, some using recurrent LSTM units and some using traditional feed-forward architectures. It feeds past and future note information into two LSTM recurrent networks respectively, and the notes being played by the other voices on the current beat into a deep feed-forward network. The three networks feed their outputs into another neural network that predicts the note a given voice plays at the current time step. The system was trained on four-part chorales composed by Bach and can be used as an inference model to produce new compositions, or to re-harmonise melodies, in a style convincingly similar to what Bach himself might have done, as attested by listener studies carried out with music experts. It does, however, assume that there are four parallel voices.
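A rough sketch of how the three inputs described above could be sliced from a four-voice score. This is my own simplification under stated assumptions, not the DeepBach code: the score is a plain list of MIDI numbers per voice, `window` and the `rest` padding value are arbitrary, and the networks themselves (and any extra inputs the real model uses) are omitted.

```python
def deepbach_style_context(score, voice, t, window=4, rest=-1):
    """Slice past, current-beat and future inputs for predicting one note.

    score: list of equal-length lists, one per voice, holding MIDI numbers.
    voice: index of the voice whose note at step t is being predicted.
    Returns (past, current_others, future). Steps outside the score are
    padded with `rest`, a hypothetical placeholder token.
    """
    n_steps = len(score[0])

    def column(step):
        # All voices at one time step, or padding beyond the edges of the score.
        if 0 <= step < n_steps:
            return [part[step] for part in score]
        return [rest] * len(score)

    past = [column(s) for s in range(t - window, t)]        # oldest first: 'past' network
    future = [column(s) for s in range(t + window, t, -1)]  # furthest first: 'future' network
    current_others = [part[t] for i, part in enumerate(score) if i != voice]
    return past, current_others, future


score = [
    [72, 72, 71, 72, 74, 72],  # soprano
    [67, 69, 67, 67, 65, 64],  # alto
    [64, 65, 62, 64, 62, 60],  # tenor
    [48, 53, 55, 48, 50, 48],  # bass
]
past, current_others, future = deepbach_style_context(score, voice=0, t=2, window=2)
print(past)            # [[72, 67, 64, 48], [72, 69, 65, 53]]
print(current_others)  # [67, 62, 55]
print(future)          # [[74, 65, 62, 50], [72, 67, 64, 48]]
```

Reading the future window from its far end back towards `t` is one natural way to feed a backward-running recurrent network; the network that combines the three summaries is beyond this sketch.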

More recently, there has been a debate within the machine learning community about the best models to use for sequential data. Recurrent neural networks had been immensely popular for analysing and generating music until DeepMind released WaveNet in 2016, a fully convolutional neural network that could generate impressively realistic speech and piano sounds. WaveNet is very slow to generate audio, but it works directly on raw audio data and, importantly, doesn’t use recurrent networks at all. Some declared RNNs dead, and subsequent research also supported a re-evaluation of the use of RNNs for sequential data.
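The key WaveNet idea is the dilated causal convolution: each layer looks back at inputs spaced `dilation` samples apart, so stacking layers with doubling dilations grows the receptive field exponentially with depth. The sketch below (pure Python, two-tap filters, helper names of my own) computes the receptive field from the formula and checks it by pushing an impulse through the stack. The doubling pattern 1, 2, ..., 512 follows the WaveNet paper; everything else is simplified for illustration.

```python
def receptive_field(kernel_size, dilations):
    """Number of input samples that can influence one output of the stack."""
    return (kernel_size - 1) * sum(dilations) + 1


def dilated_causal_conv(x, weights, dilation):
    """y[t] = sum_k weights[k] * x[t - k * dilation], treating x[t] as 0 for t < 0."""
    return [
        sum(w * x[t - k * dilation] for k, w in enumerate(weights) if t - k * dilation >= 0)
        for t in range(len(x))
    ]


dilations = [2 ** i for i in range(10)]   # 1, 2, 4, ..., 512
print(receptive_field(2, dilations))      # 1024
print(receptive_field(2, dilations * 3))  # 3070 for three stacked blocks

# Empirical check: an impulse at t = 0 reaches exactly that many outputs.
signal = [1.0] + [0.0] * 2047
for d in dilations:
    signal = dilated_causal_conv(signal, [1.0, 1.0], d)
reach = max(t for t, v in enumerate(signal) if v != 0.0) + 1
print(reach)                              # 1024
```

Even after ten layers, one output only sees 1,024 samples, which is a small fraction of a second of audio.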

Audio data

An audio CD stores 44,100 samples per second for each channel. Even if we reduce this to 16,000 samples per second, it starts to sound as if it is being played over a phone. Anything below 1,000 is barely recognisable. That is a huge amount of information: for a machine learning system to learn meaningful things about the structure of a composition as a whole, it would need to relate samples that are millions of steps apart.
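The arithmetic is worth spelling out. Assuming a three-minute track and a single channel (both my assumptions for illustration), this counts the samples a model would have to span at each of the rates mentioned above:

```python
def samples_in(seconds, sample_rate):
    """Number of samples in a single channel of audio."""
    return int(seconds * sample_rate)


track_seconds = 3 * 60  # an assumed three-minute song
for rate in (44_100, 16_000, 1_000):
    print(f"{rate} Hz: {samples_in(track_seconds, rate):,} samples")
# 44100 Hz: 7,938,000 samples
# 16000 Hz: 2,880,000 samples
# 1000 Hz: 180,000 samples
```

Even at the barely recognisable 1,000 Hz rate, a three-minute piece is 180,000 steps long.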

DeepMind have continued to improve their WaveNet model, and Baidu have introduced their Deep Voice model, both of which produce highly realistic speech. The music generated by WaveNet clearly sounds like a piano, but lacks the kind of compositional structure most listeners could follow. I suspect a significant architectural change will be needed for music, for the reasons discussed in this article.

Music is deemed to be a creative outcome derived from effort and skill. As such, most music recordings are sold, especially the ones that people like. Unlike our words, which we are happy to give away to any company that offers us a free email account, we don’t give away music easily. I suspect we will see many advances in audio-based applications of machine learning for music analysis and generation coming from companies that have access to very large datasets, although there is good work coming from the academic community in this area too.

Evaluation

Most people can’t tell you why they like the music that they like, at least not with enough resolution to accurately predict their preference for new tracks. Without syntax to dictate, or guide, the evaluation of the ‘correctness’ of structure, music evaluation is ultimately subjective. People don’t agree on the mood of pieces of music, the quality of pieces of music, the classification of music into styles or the function of music. We have tried to deal with this using statistics. How do most people classify this track? What mood descriptors do most people use to describe this track?
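The statistical approach usually boils down to aggregating labels from many listeners. A minimal sketch with hypothetical mood tags from ten listeners (the data and the `consensus` helper are invented for illustration) shows how a majority vote can hide disagreement:

```python
from collections import Counter


def consensus(labels):
    """Return the most common label and the fraction of raters who chose it."""
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes / len(labels)


# Hypothetical mood tags from ten listeners for one track.
tags = ["melancholy", "calm", "melancholy", "sad", "calm",
        "melancholy", "nostalgic", "calm", "melancholy", "dreamy"]
print(consensus(tags))  # ('melancholy', 0.4)
```

The ‘winning’ tag is chosen by only 40% of listeners here; the other 60% are simply outvoted.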

The reality is that any training set large enough for current neural network models of music analysis or generation is going to be predominantly made up of music that most people mostly don’t want to listen to, so what can we expect from user evaluations of music generated using these models?

Cultural bias

Most people like music. Every culture in the world has been observed to have some form of musical practice. This might sound obvious, but the same can’t be said for writing, literal drawing or counting. Unfortunately, however, the way music is typically symbolised for most neural music applications is heavily biased towards western art music of the last few centuries. Most listener studies in music psychology use only western classical music.

I think there are still a lot of interesting things to be done with symbolic representations of music; my own research is primarily concerned with symbolic forms of music and machine learning. However, significant effort is needed in the music machine learning community (as well as the broader computer music community) to engage with ethnomusicologists, composers and diverse performing musicians to advance our understanding of the structural qualities of music. How people think about music when they write, perform and listen to it is fascinating. If machine learning is going to support music practice, it needs a strong foundation in the wider, human aspects of musical expression.

Looking forward

Today I’ve introduced, at a high level, some of the major challenges in analysing and generating music with machine learning. Over the next few months I’ll be talking about my own research and other exciting developments in this area, and getting into more technical details and hands-on examples.

About the Author


Patrick is a composer and AI researcher. He is a member of the Creative AI team at SensiLab and composes for film, games and music projects.
