Can you teach a robot to draw? We normally think of drawing as an (almost) exclusively human activity. While other animals, such as chimpanzees, can be trained to paint or draw, drawing isn’t something that is regularly observed in animals other than humans.

At SensiLab, we have been working for several years on making robots designed explicitly to create their own drawings. These drawing robots, or drawbots, are relatively simple, insect-like machines that often work cooperatively as swarms and exhibit common aspects of collective behaviour and emergent intelligence.

The idea behind this research is to explore the concept of post-anthropocentric creativity. We want to understand what art made by an autonomous, non-human intelligence might look like, and if artificial systems can exhibit what we recognise as their own creative behaviour.

Beginnings: Drawbot V1

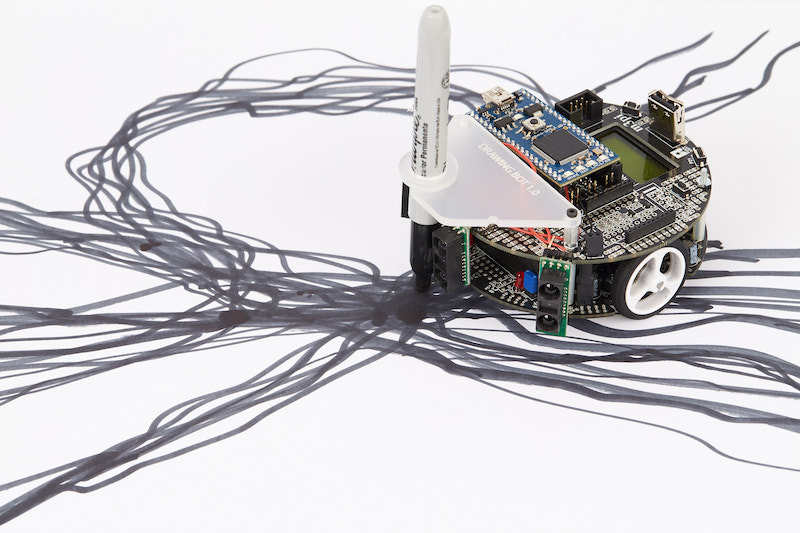

In our earliest experiments, we modified the Pololu m3pi robot to allow it to draw, adding a pen holder at the rear, along with sensors to avoid collisions. The robot left a trail of ink behind it as it moved around, but couldn’t lift the pen from the paper, which meant it had to draw continuously while the robot was operating.

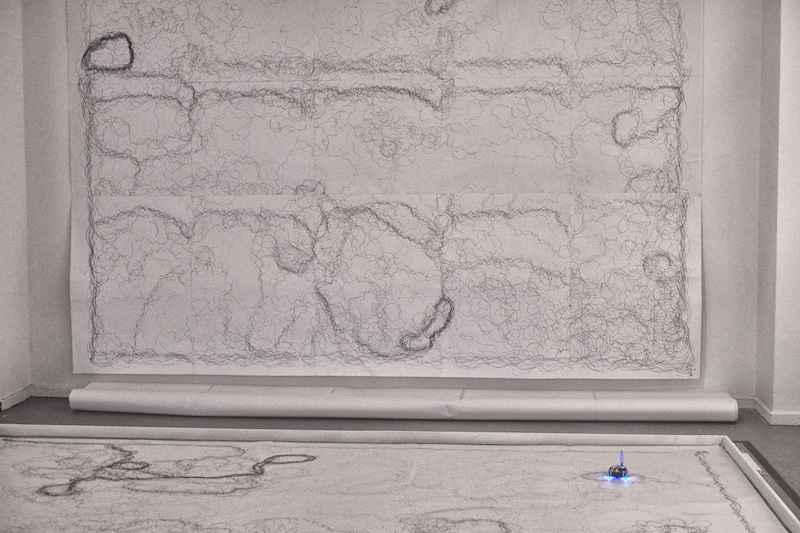

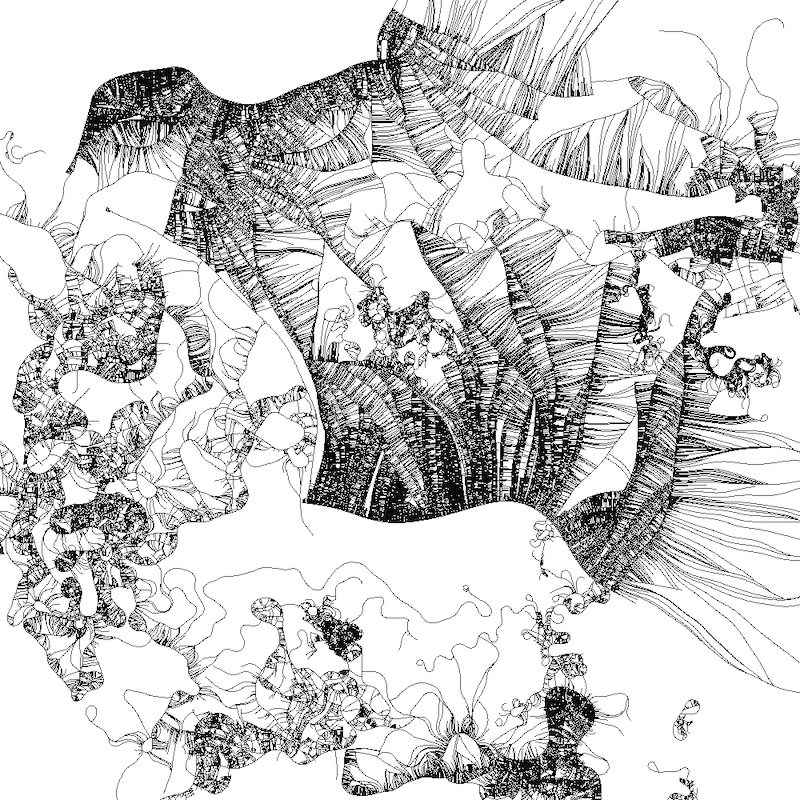

These robots were commissioned by Luc Steels for an exhibition that was part of the International Joint Conference on Artificial Intelligence (IJCAI) in 2015. Robots drew inside a 4m x 4m ‘drawing pen’, taking 4-6 hours to create a single drawing, and many drawings were created over the course of the exhibition. People not only found the drawings interesting, but were fascinated by the drawing process itself. Watching machines create, seemingly with intention and purpose, is something we are not yet used to.

Algorithms for Drawing Robots

Having the hardware for autonomous drawing is only the start. The next question is: what does it mean for a non-human entity to draw? Many objects and machines can make marks and interesting patterns [1], but what differentiates mark making from drawing?

People have designed many beautiful and interesting ‘drawing machines’, a classic example being the harmonograph. Certainly machines like these make interesting and beautiful drawings (and the machines themselves are often works of art), but the drawing repertoire is determined only by the mechanical and basic physical process of the pendulum. The harmonograph lacks its own decision-making autonomy: how to draw, what to draw, when to draw, and when not to draw. There is no form of evaluation or response to what is drawn either.
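To make the point concrete: an idealised harmonograph is commonly modelled with each axis as a sum of damped sinusoids, one per pendulum. The sketch below uses that textbook model (the function and parameter names are our own). Once the amplitudes, frequencies, phases and damping rates are set, every point of the drawing is fixed in advance; nothing in the loop looks at what has already been drawn.

```python
import math


def harmonograph(pendulums_x, pendulums_y, t_max=60.0, dt=0.01):
    """Pen trace of an idealised harmonograph.

    Each pendulum is (amplitude, frequency_hz, phase_rad, damping_per_s). The pen
    coordinate on each axis is the sum of that axis's damped sinusoids."""
    def axis(pendulums, t):
        return sum(a * math.sin(2 * math.pi * f * t + p) * math.exp(-d * t)
                   for a, f, p, d in pendulums)

    n = round(t_max / dt) + 1  # number of sample points, including t = 0
    return [(axis(pendulums_x, i * dt), axis(pendulums_y, i * dt)) for i in range(n)]


# Example: two slightly detuned pendulums produce a slowly decaying Lissajous-like figure.
points = harmonograph([(1.0, 2.0, 0.0, 0.02)], [(1.0, 3.01, math.pi / 2, 0.02)])
```

Changing any parameter changes the drawing, but that choice is made by whoever sets up the machine, not by the machine itself.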

Our work was inspired by several pioneering experiments in drawing robotics. These include works by the artist Leonel Moura, who for many years has been building autonomous, art-making robots. Moura celebrates the use of autonomous machines to create art-objects “indifferent to concerns about representation, essence or purpose” [2].

Another seminal research project was the Drawbots Project, undertaken by artists, philosophers and scientists at the University of Sussex: “In 2005 an international, multi-disciplinary, inter-institutional group of researchers began a three-year research project that is attempting to use evolutionary and adaptive systems methodology to make an embodied robot that can exhibit creative behaviour by making marks or drawing”.

The project was interesting because it wanted to remove any human artistic ‘signature’ in the resultant images: any creativity should emerge through an evolutionary process, not be directed by the designers’ choice of specific drawing algorithms.

While the project never really reached its ultimate goal of a fully autonomous robot artist, it did investigate a number of interesting philosophical and technical questions about autonomy, authenticity and intention in an artificial creative practice [3].

Niche Construction

My own experiments were inspired by the biological process of Niche Construction, where organisms modify their environment and those modifications are inherited by subsequent generations. Proponents of niche construction argue that it acts as a feedback process for natural selection and plays an important role in evolution.

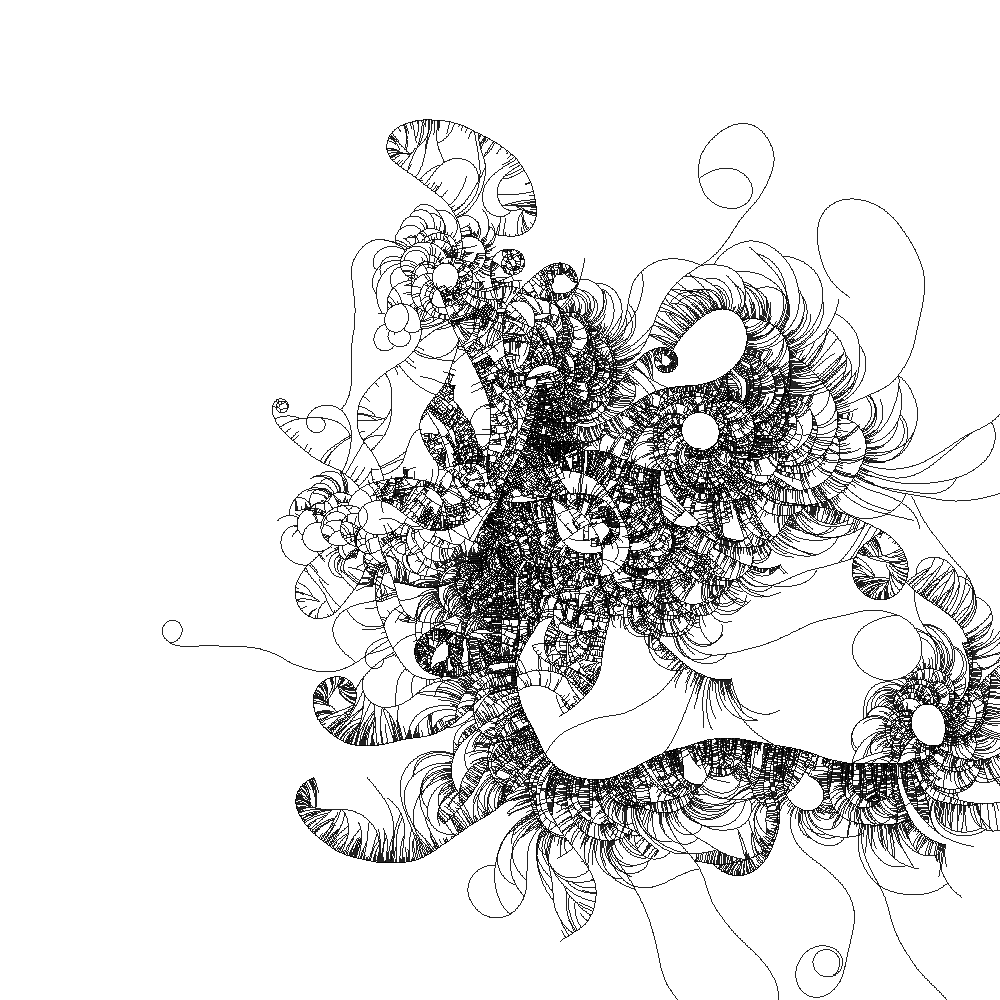

I had been experimenting with niche constructing agents for some time, having developed a software system in which a swarm of software drawing agents constructed their own environment, with line density as the environmental condition.

In this system, each agent has a genetically evolving preference for a specific line density. Some like low density (just a few lines nearby), others prefer high density. In regions of preferred density agents are more likely to reproduce, and their offspring typically inherit a similar density preference to their parents.
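The agent model described above can be sketched as a small grid-based simulation. This is a minimal sketch under our own assumptions, not the original system: the canvas is a grid of cells holding mark counts, agents random-walk and mark every cell they visit, and the grid size, reproduction probabilities, mutation spread and population cap are all hypothetical values. Agents reproduce with higher probability when the local density is close to their inherited preference, and offspring inherit a slightly mutated copy of that preference.

```python
import random
from dataclasses import dataclass

GRID = 32              # hypothetical canvas resolution (cells per side)
MAX_LINES = 10         # hypothetical: a cell counts as fully dense after this many marks
TOLERANCE = 0.1        # how close local density must be to the preference to be "in niche"
REPRO_IN_NICHE = 0.05  # hypothetical per-step reproduction probability in the preferred density
REPRO_OUTSIDE = 0.005  # much lower probability elsewhere
MUTATION_SD = 0.05     # offspring preference = parent's preference plus Gaussian noise
MAX_AGENTS = 200       # population cap, an assumption to keep the sketch bounded


@dataclass
class Agent:
    x: int
    y: int
    preference: float  # inherited preferred line density, in [0, 1]


def local_density(canvas, x, y):
    """Normalised line density of the 3x3 neighbourhood around (x, y), wrapping at the edges."""
    marks = sum(canvas[(y + dy) % GRID][(x + dx) % GRID]
                for dx in (-1, 0, 1) for dy in (-1, 0, 1))
    return marks / (9 * MAX_LINES)


def step(canvas, agents, rng):
    """Move every agent one cell, let it draw, and possibly reproduce."""
    offspring = []
    for a in agents:
        # Random walk; every move leaves a mark, so agents construct their own niche.
        a.x = (a.x + rng.choice((-1, 0, 1))) % GRID
        a.y = (a.y + rng.choice((-1, 0, 1))) % GRID
        canvas[a.y][a.x] = min(MAX_LINES, canvas[a.y][a.x] + 1)
        in_niche = abs(local_density(canvas, a.x, a.y) - a.preference) < TOLERANCE
        p_repro = REPRO_IN_NICHE if in_niche else REPRO_OUTSIDE
        room = len(agents) + len(offspring) < MAX_AGENTS
        if room and rng.random() < p_repro:
            # Offspring inherit a density preference similar to their parent's.
            child = min(1.0, max(0.0, rng.gauss(a.preference, MUTATION_SD)))
            offspring.append(Agent(a.x, a.y, child))
    agents.extend(offspring)


def run(steps=500, n_agents=20, seed=0):
    """Run the simulation and return the final canvas and population."""
    rng = random.Random(seed)
    canvas = [[0] * GRID for _ in range(GRID)]
    agents = [Agent(rng.randrange(GRID), rng.randrange(GRID), rng.random())
              for _ in range(n_agents)]
    for _ in range(steps):
        step(canvas, agents, rng)
    return canvas, agents
```

Rendering the returned canvas as a greyscale image shows where agents have built up denser or sparser regions. For simplicity, agents in this sketch never die; the population is simply capped.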

The result is drawings with significant variation in density. The role niche construction plays can be tested statistically by running simulations with it switched on and off, and comparing the variation in image density over a large number of runs. More details can be found in this paper I presented at the Artificial Life 12 conference in 2010.
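One way to carry out the on/off comparison is sketched below, under our own assumptions: each finished image is reduced to a single number (here the standard deviation of per-cell line density, a measure we chose for illustration), and the two sets of runs are compared with a permutation test. The permutation test is a stand-in; the statistics used in the paper may differ.

```python
import random
import statistics


def density_variation(canvas):
    """Population standard deviation of per-cell line density: one number per image."""
    cells = [v for row in canvas for v in row]
    return statistics.pstdev(cells)


def permutation_test(a, b, n_perm=10_000, seed=0):
    """Two-sided permutation test on the difference of means of samples a and b.

    Returns (observed difference, estimated p-value)."""
    rng = random.Random(seed)
    observed = statistics.mean(a) - statistics.mean(b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign runs to the "on" and "off" groups
        diff = statistics.mean(pooled[:len(a)]) - statistics.mean(pooled[len(a):])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The +1 terms count the observed labelling itself, avoiding a p-value of exactly 0.
    return observed, (extreme + 1) / (n_perm + 1)
```

Given one list of `density_variation` values from runs with niche construction enabled and another from runs with it disabled, `permutation_test(on, off)` returns the observed difference in mean variation and an estimated two-sided p-value.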

Evolution and Robots

While it’s easy to make software agents that evolve in simulation, allowing robots to evolve physically presents numerous difficulties. The main one is that it is difficult to make autonomously self-reproducing machines, although there have been many notable attempts, such as this early one by Lionel Penrose (father of Roger Penrose).

The University of Sussex Drawbots project relied on evolutionary simulation in software, then downloaded the resulting code into the robots. In our experiments, the density niche is instead set by the user, via a small potentiometer at the base of the robot.

The robot measured the average density of lines drawn underneath it and tried to reinforce existing lines until they matched its niche preference. If the density exceeded the preference, the robot moved away to find a more suitable area in which to draw. Because the robot could not lift the pen from the paper, it was always drawing, so lines accumulated on the canvas over time and a robot with a low density preference found it increasingly difficult to find its niche.
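The potentiometer setting and the decision rule described above can be written as two small functions. This is a hypothetical sketch: the 10-bit ADC range assumed for the potentiometer, the tolerance band and the minimum-ink threshold are illustrative values, and what a robot does once its niche is satisfied (here, moving on) is our assumption rather than a documented detail of the original robots.

```python
from enum import Enum


class Action(Enum):
    REINFORCE = "reinforce"  # keep drawing over the existing lines here
    EXPLORE = "explore"      # wander in search of lines to reinforce
    LEAVE = "leave"          # too dense for this robot: move away


def pot_to_preference(raw, adc_max=1023):
    """Map a raw potentiometer reading to a density preference in [0, 1].

    adc_max=1023 assumes a 10-bit ADC (hypothetical)."""
    raw = max(0, min(adc_max, raw))
    return raw / adc_max


def choose_action(measured_density, preference, tolerance=0.05, min_ink=0.01):
    """Decide what to do given the average line density measured under the robot.

    tolerance and min_ink are illustrative thresholds, not values from the robots."""
    if measured_density > preference + tolerance:
        return Action.LEAVE       # density exceeds the niche: find somewhere sparser
    if measured_density < min_ink:
        return Action.EXPLORE     # no existing lines here to reinforce
    if measured_density < preference - tolerance:
        return Action.REINFORCE   # lines present, but not yet dense enough
    return Action.EXPLORE         # niche reached here; moving on is an assumption
```

Since the pen is always down, even `EXPLORE` and `LEAVE` add ink to the canvas, which is why robots with a low preference found their niche harder to find as a drawing progressed.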

Drawbot V2

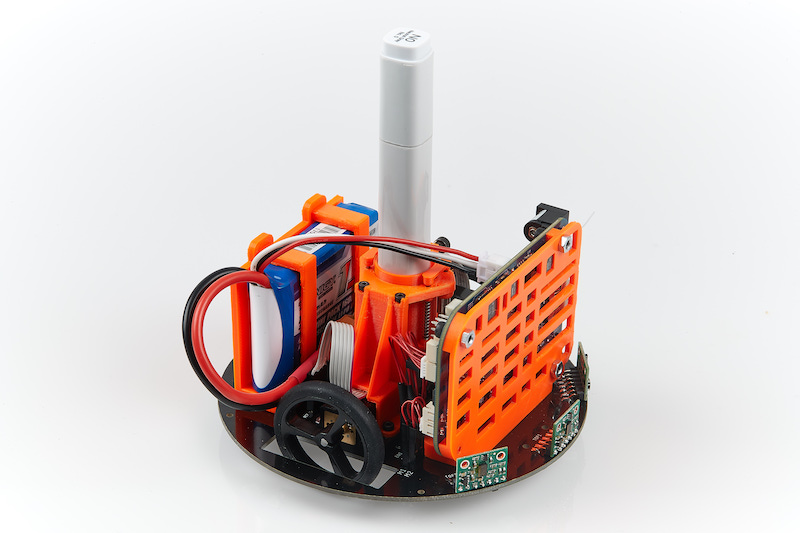

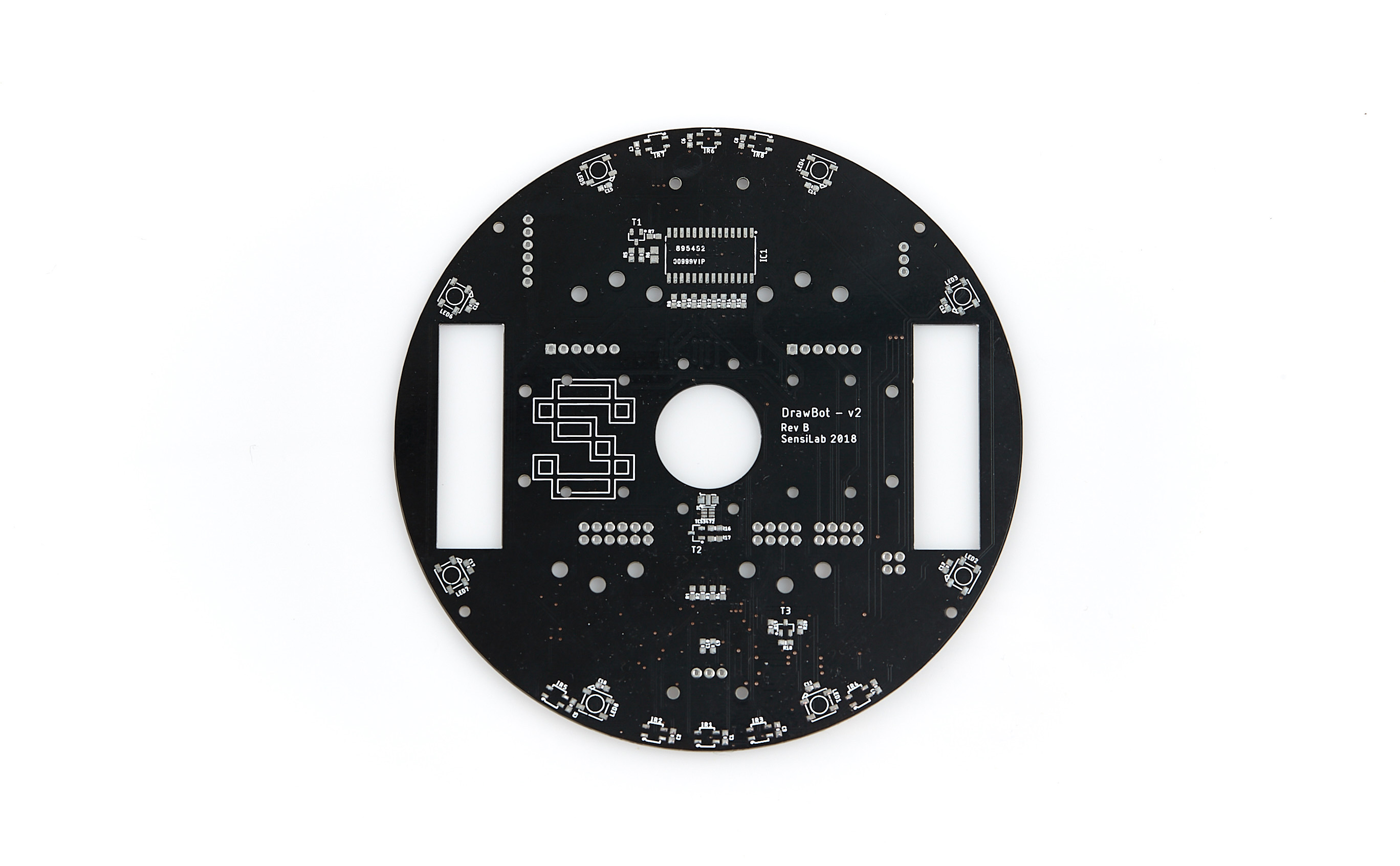

To address some of the issues with the modified m3pi robots, we decided to design a drawing robot from scratch. SensiLab has all the necessary design and technical expertise to do this in-house. This formed the basis of our Version 2 (V2) drawing robot.

The ‘brain’ of our V2 robot is the BeagleBone Blue. This board is well suited to robotics applications: it includes motor and servo controllers, UARTs, LiPo battery charging and an Octavo Systems OSD3358 system-in-package, which has an ARM Cortex-A8 processor running at 1GHz and two 200MHz programmable real-time units (PRUs). It can run a variety of operating systems, including various Linux distributions, and supports ROS. The board also includes Wi-Fi and Bluetooth modules for easy wireless communication.

Our robot design uses a circular board with the pen placed in the centre, and a two-wheel drive with a ball stabiliser. A servo mechanism allows the robot to raise and lower the pen, and a spring-based suspension system keeps the pen in contact with the paper while allowing some movement over bumps and uneven surfaces. A big issue when running drawing robots for extended periods over large paper surfaces is wear of the pen tip. Additionally, creases or bumps tend to appear in the paper over time, causing the robot to become stuck or the pen to tear the paper. The suspension mechanism greatly helps overcome these problems.

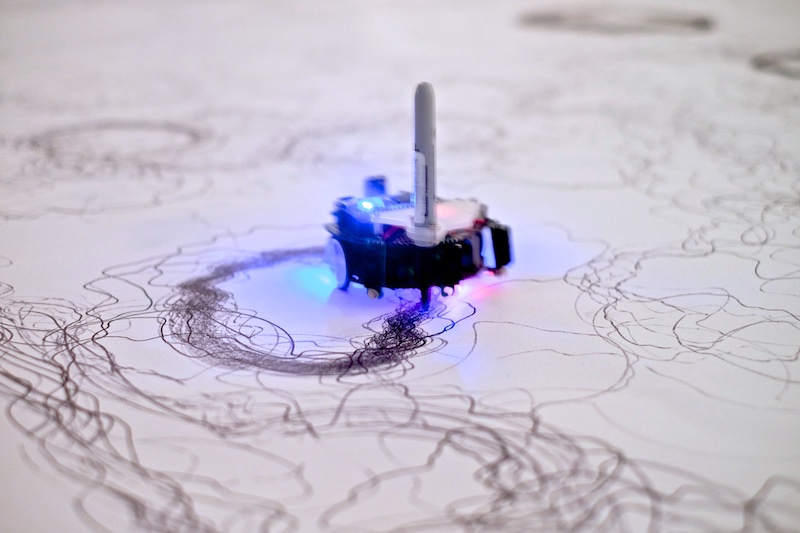

The V2 robot includes three front-facing lidar range-finding sensors, eight QTR reflectance sensors (for line detection), a TCS34725 colour sensor for detecting the colour of lines drawn directly underneath the robot, and some NeoPixel LEDs for lighting the drawing surface and added bling.
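As an illustration of how an array of reflectance sensors can be used, the sketch below turns eight readings into a line position and a crude local density estimate. It assumes readings already calibrated to 0 (white paper) through 1000 (full ink). The weighted-average position is a common technique, similar in spirit to the one in Pololu's QTR sensor libraries; the coverage-fraction density proxy and its 50% threshold are our own hypothetical choices, not necessarily how the V2 robots measure density.

```python
def line_position(readings):
    """Weighted-average position of ink under an array of reflectance sensors.

    readings: calibrated values, 0 = white paper, 1000 = full ink (assumed scale).
    Returns a position in sensor units (0 .. len - 1), or None if no ink is seen."""
    total = sum(readings)
    if total == 0:
        return None
    return sum(i * r for i, r in enumerate(readings)) / total


def coverage(readings, max_value=1000, threshold=0.5):
    """Fraction of sensors that see ink: a crude local line-density estimate."""
    hits = sum(1 for r in readings if r >= threshold * max_value)
    return hits / len(readings)
```

For example, readings of `[0, 0, 0, 1000, 1000, 0, 0, 0]` give a line position of 3.5 (between the two middle sensors) and a coverage of 0.25.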

We used two low-power micro metal gearmotors (75:1 gear ratio) with magnetic encoders attached to keep track of wheel rotation. The relatively high gear ratio gives the robot enough torque to move effectively, even uphill or over rough surfaces. At maximum speed it can move at close to 1 m/s.
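The wheel encoders make dead reckoning possible: integrating the distance travelled by each wheel gives an estimate of the robot's position and heading. The sketch below is standard differential-drive odometry; the encoder resolution (12 counts per motor revolution), the nominal 75:1 ratio, the 32 mm wheel diameter and the 100 mm wheel base are assumed values that would need to be replaced with the real robot's figures.

```python
import math
from dataclasses import dataclass

# Assumed values: replace with the real robot's measurements.
COUNTS_PER_MOTOR_REV = 12   # encoder counts per motor-shaft revolution (assumed)
GEAR_RATIO = 75.0           # nominal 75:1 gearbox
WHEEL_DIAMETER_M = 0.032    # 32 mm wheels (assumed)
WHEEL_BASE_M = 0.10         # distance between the wheels (assumed)

COUNTS_PER_WHEEL_REV = COUNTS_PER_MOTOR_REV * GEAR_RATIO
METRES_PER_COUNT = math.pi * WHEEL_DIAMETER_M / COUNTS_PER_WHEEL_REV


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading in radians, kept in [-pi, pi)


def update_pose(pose, d_left_counts, d_right_counts):
    """Dead-reckoning update from the encoder counts accumulated since the last call."""
    d_left = d_left_counts * METRES_PER_COUNT
    d_right = d_right_counts * METRES_PER_COUNT
    d_centre = (d_left + d_right) / 2            # distance travelled by the robot's centre
    d_theta = (d_right - d_left) / WHEEL_BASE_M  # change in heading
    mid_heading = pose.theta + d_theta / 2       # midpoint approximation of the arc
    theta = (pose.theta + d_theta + math.pi) % (2 * math.pi) - math.pi
    return Pose(pose.x + d_centre * math.cos(mid_heading),
                pose.y + d_centre * math.sin(mid_heading),
                theta)
```

Odometry drifts over time, particularly if the wheels slip on the paper, so it is best treated as a short-term estimate.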

A small OLED screen displays diagnostic and status information, and the whole system is powered by a 1600 mAh LiPo battery, which gives between 1 and 5 hours of running time, depending on load and activity.
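As a rough sanity check (ignoring voltage sag, conversion losses and the reserve a LiPo should not be discharged into), running time is approximately capacity divided by average current, so the quoted 1 to 5 hours corresponds to average current draws of roughly:

$$
t \approx \frac{C}{\bar{I}}
\quad\Longrightarrow\quad
\bar{I} \approx \frac{C}{t}:
\qquad
\frac{1600\ \text{mAh}}{5\ \text{h}} = 320\ \text{mA},
\qquad
\frac{1600\ \text{mAh}}{1\ \text{h}} = 1600\ \text{mA}.
$$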

We designed a custom circuit board for the sensors, motors, servos and control logic, which also serves as the base of the robot. 3D-printed mounts for the pen mechanism, battery and BeagleBone board are attached to the base board, making it easy to repair or replace a part if it fails.

The robot aesthetic is largely functional — its structure designed to physically support the hardware and keep the build complexity at a manageable level.

Daemons in the Machine Exhibition

A swarm of the V2 drawing robots [4] was commissioned by the LABORATORIA Art&Science Foundation in Moscow and exhibited as part of the Daemons in the Machine exhibition at the Moscow Museum of Modern Art in October 2018.

As in the IJCAI exhibition, the robots roamed a large ‘drawing pen’ (in this case about 3m x 3m) containing a large sheet of paper. The drawing was complete when the robots stopped drawing altogether or ran out of power.

In this exhibition, each robot's behaviour was determined by the colour of the pen it was drawing with and its individual density preference. Each robot sought out lines made by robots using the same colour and drew around them until the density reached its preferred level. Because each robot responded to lines left by others, while also having to avoid obstacles and each other, a form of emergent stigmergy arose between the robots.
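A hypothetical sketch of how colour and density could combine in each robot's decisions. The reference chromaticity values are invented calibration data, and classifying a colour-sensor reading by its nearest reference chromaticity (each channel divided by R + G + B, so that overall brightness largely cancels) is our choice of method. Obstacle and robot avoidance, which would take priority in a real controller, are left out.

```python
import math

# Hypothetical calibration data: chromaticity (r, g, b), each channel divided by
# R + G + B, as it might be measured over each ink and over bare paper.
REFERENCE_CHROMA = {
    "red": (0.55, 0.25, 0.20),
    "green": (0.25, 0.50, 0.25),
    "blue": (0.20, 0.30, 0.50),
    "paper": (0.34, 0.33, 0.33),
}


def classify_ink(r, g, b):
    """Nearest-reference classification of a raw RGB reading from a colour sensor.

    Returns None if there is no signal at all."""
    total = r + g + b
    if total == 0:
        return None
    chroma = (r / total, g / total, b / total)
    return min(REFERENCE_CHROMA,
               key=lambda name: math.dist(chroma, REFERENCE_CHROMA[name]))


def colour_gated_action(my_colour, seen, measured_density, preference, tolerance=0.05):
    """Hypothetical combination of the colour and density rules (avoidance omitted)."""
    if seen != my_colour:
        return "search"   # not this robot's colour: keep looking for matching lines
    if measured_density < preference - tolerance:
        return "draw"     # matching lines, still below the preferred density
    return "move_on"      # preferred density reached here
```

Because chromaticity discards brightness, this separates coloured inks from white paper but would not distinguish a black pen from the paper; that would need a brightness check as well.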

V3 Drawbots

Running the V2 drawbots over a two-month exhibition taught us a lot about how to make the design more robust and able to run well for extended periods. We are currently working on a third iteration (V3), which we hope to make publicly available as a hardware platform for drawing experiments. Having a reliable robot that is custom designed for drawing, with enough sensors and actuators, allows you to dive deeper into the programming aspects of drawing robot development. Keep an eye on ai.sensilab for further details.

Minimal Creativity?

You might be wondering how creative these drawing robots are. They do satisfy the criteria I listed earlier for having the potential to exhibit creativity: they are capable of autonomous decision making based on interaction with the drawing and with each other, including deciding whether or not to draw. They also perform a simple form of evaluation, looking at the colour and density of lines drawn in their immediate vicinity and responding accordingly.

But is what they do really creative, and is it art? Each drawing is different, yet there is an overall stylistic consistency. My thinking is that they display some minimal creativity, just as we might sense creative behaviour in insects or birds [5]. This is not Art in the human sense, but it is creativity in a minimal form.

Papers: McCormack J. (2017) Niche Constructing Drawing Robots. In: Correia J., Ciesielski V., Liapis A. (eds) Computational Intelligence in Music, Sound, Art and Design. EvoMUSART 2017. Lecture Notes in Computer Science, vol 10198. Springer, Cham

Acknowledgements

Elliott Wilson developed the Drawbot V2 hardware design and build. This research was supported by an ARC Future Fellowship FT170100033.

Footnotes

[1] Natural systems and other animals also make many interesting patterns, but it's important to distinguish intentional mark making from pattern formation that occurs as a side effect of, for example, movement.

[2] See his book: L. Moura and H. G. Pereira, Man + Robots | Symbiotic Art, LxXL, 2014.

[3] For details, see M. A. Boden, Creativity and Art: Three Roads to Surprise, Oxford University Press, 2010.

[4] There are 8 robots in the current swarm.

[5] For example, the decorated bowers built by male bowerbirds.

About the Author


Jon McCormack is an Australia-based artist and researcher in computing. His research interests include generative art, design and music, evolutionary systems, computer creativity, visualisation, virtual reality, interaction design, physical computing, machine learning, L-systems and developmental models. Jon is the founder and Director of SensiLab and oversees all operations, research programs and partnerships. He is also Professor of Computer Science in Monash University’s Faculty of Information Technology and currently an ARC Future Fellow.
